By Jeff Berardelli

If you’ve ever engaged in a discussion about climate change in person or online, you’ve probably encountered some arguments about what the science says. Some of those claims may sound logical but are actually misleading or inaccurate.

In fact, misconceptions and outright misinformation have gotten so out of hand that, just days ago, NASA felt the need to publicly address one of the most popular myths: that a decrease in the sun’s output will soon trigger cooling and a mini ice age.

This and other topics have been studied thoroughly and debunked over and over again by climate scientists. Nevertheless these myths persist, often as a result of an organized disinformation campaign waged by special interests whose goal is to raise doubts among the public and delay action on human-caused climate change.

Here is a look at 10 of the most common myths about climate change that persist in the public sphere and what science has to say about them.

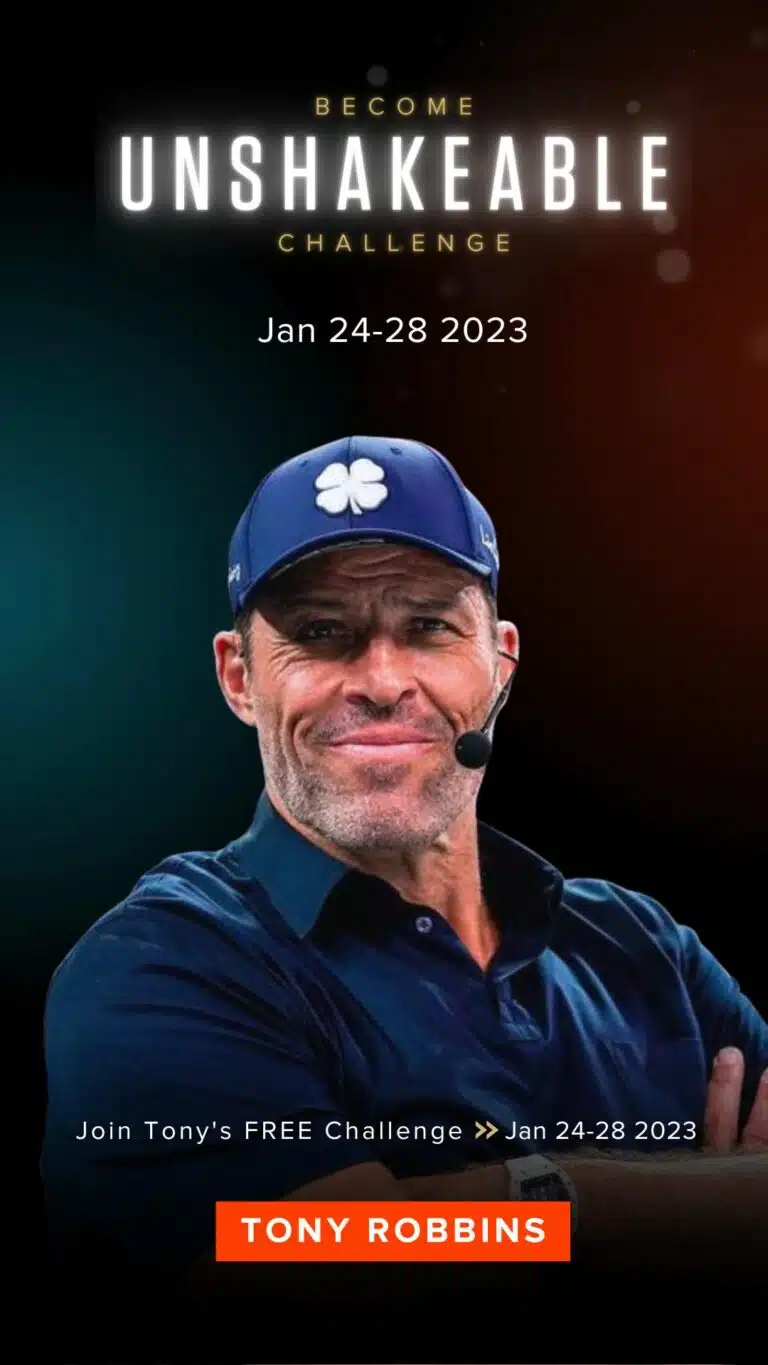

Myth #1: It’s the sun.

While it is true that varying intensity of energy from the sun has driven long-term climate changes like ice ages in the distant past, the sun cannot explain the recent spike in warming.

Over tens and hundreds of thousands of years, the Earth’s tilt and orbit around the sun vary in predictable cycles. The way these cycles interact with each other causes gradual increases or decreases in the energy from the sun reaching the Earth. That change in energy can gradually, over thousands of years, ease the Earth into and out of ice age cycles. Over about the past 800,000 years, these ice/melt cycles have occurred about every 100,000 years.

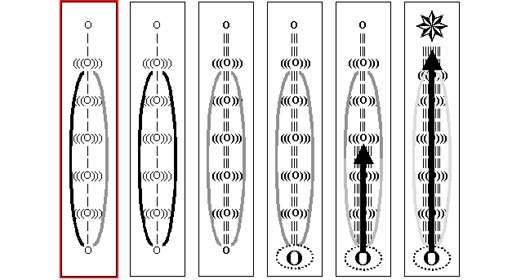

But the recent temperature spike has been markedly faster, taking place over 150 years, with the majority happening over just the past few decades. Over that same period, the sun’s output has moved in the opposite direction from temperature. As this NASA graph shows, solar irradiance is down slightly from a peak in the 1950s.

In fact, according to NASA, in late 2020 the current solar cycle is headed for its lowest level since 1750, meaning the lowest energy output from the sun in 270 years. Still, that change in output is minor, having varied by only 0.1% since 1750.

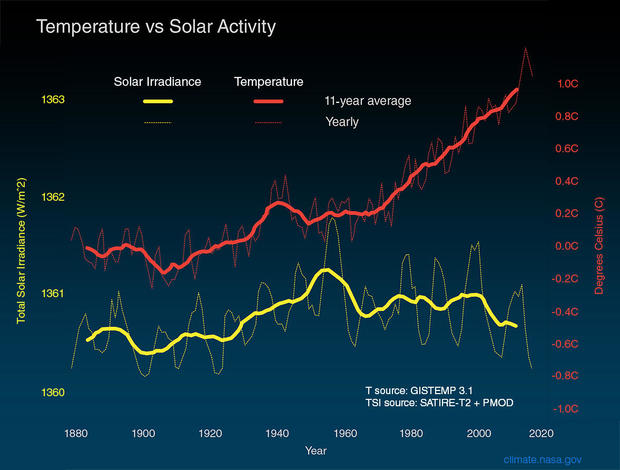

Myth #2: Carbon dioxide levels are tiny. They can’t make a difference.

It’s true, carbon dioxide (CO2) makes up a tiny fraction of the atmosphere, less than a tenth of a percent. But because of CO2’s powerful heat-trapping greenhouse properties, its presence makes a huge difference. Currently, CO2 levels keep Earth’s temperature at a comfortable average of nearly 60 degrees Fahrenheit. As shown in the animation below, if CO2 abruptly dropped to zero, Earth’s average temperature would drop far below freezing, eradicating most life as we know it.

To be clear, a drop in CO2 wouldn’t directly cause the whole drop in temperature. The biggest impact comes from the most abundant greenhouse gas, water vapor, which condenses out because colder air holds less of it; this is what tanks the greenhouse effect in the simulation. Positive feedbacks like the growth of ice cover would further accelerate the temperature plunge. But it’s CO2 that drives all this change.

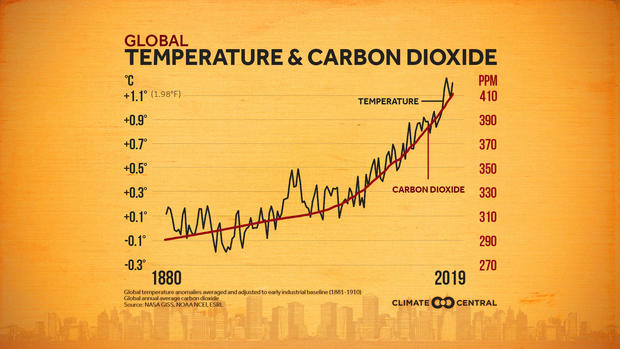

Because small concentrations of carbon dioxide have an outsized impact, scientists are very concerned about the recent unprecedented rate of increase. For the vast majority of the past million years, CO2 levels have been below 280 parts per million. Since the industrial revolution of the 1800s, levels have jumped to 415 parts per million, an astounding 48% increase in 150 years.
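For readers who want to check the arithmetic, here is a minimal Python sketch that uses only the two concentrations quoted above. The function and variable names are illustrative, not taken from any source.

```python
# Concentrations quoted in the text, in parts per million (ppm).
PREINDUSTRIAL_PPM = 280
CURRENT_PPM = 415


def percent_increase(old: float, new: float) -> float:
    """Relative change from old to new, expressed as a percentage."""
    return (new - old) / old * 100


# How much CO2 has risen relative to the pre-industrial level.
increase = percent_increase(PREINDUSTRIAL_PPM, CURRENT_PPM)

# Share of the atmosphere: 1 ppm is one ten-thousandth of a percent.
share_of_atmosphere = CURRENT_PPM / 10_000

print(f"Increase since pre-industrial times: {increase:.0f}%")
print(f"Share of the atmosphere: {share_of_atmosphere:.4f}%")
```

The second figure shows why both statements can be true at once: CO2 remains well under a tenth of a percent of the atmosphere even after rising by nearly half.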

As the graph below shows, that dramatic increase in carbon dioxide levels coincides with the rapid warming.

Myth #3: Scientists disagree on the cause of climate change.

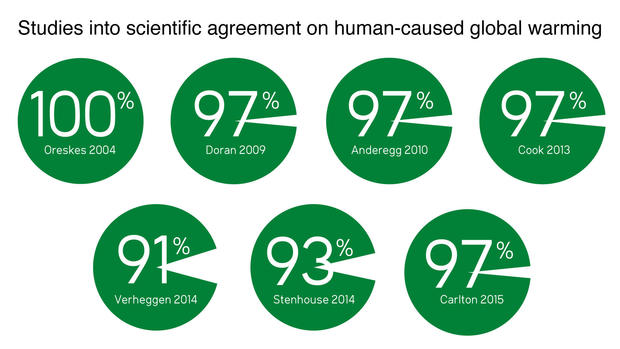

Contrary to popular belief, scientists do not disagree that climate change is happening and that it is caused by humans. Various analyses over many years have shown that between 90% and 100% of publishing climate scientists agree that humans are the main cause of our warming climate. Many studies have evaluated the scientific consensus, but the most famous, which as of this summer has been downloaded 1 million times, is this 2013 paper quantifying that agreement at over 97%.

According to NASA, “Multiple studies published in peer-reviewed scientific journals show that 97 percent or more of actively publishing climate scientists agree: Climate-warming trends over the past century are extremely likely due to human activities. In addition, most of the leading scientific organizations worldwide have issued public statements endorsing this position.”

For perspective, NASA Goddard Institute climate scientist Kate Marvel put this consensus into relatable terms: “We are more sure that greenhouse gas is causing climate change than we are that smoking causes cancer.”

Despite this scientific consensus, only 1 in 5 Americans understand that almost all climate scientists agree that climate change is real and caused by humans. That is called the “Consensus Gap.”

Myth #4: The climate has always changed. It’s natural.

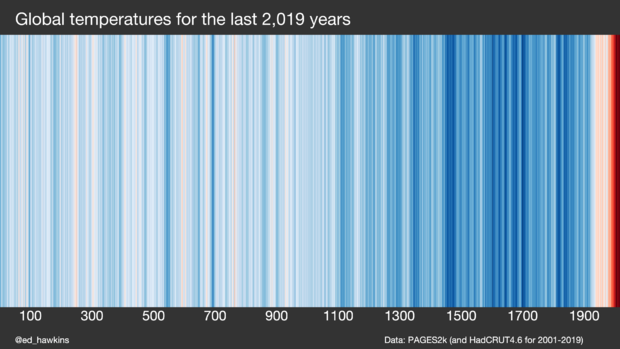

No scientist will disagree that the climate changes naturally. It always has and it always will. What makes the recent changes stand out is the unprecedented pace of change.

Because “the present anthropogenic (human-caused) carbon release rate is unprecedented during the past 66 million years,” as scientists concluded in a 2016 study in Nature Geoscience, the rate of temperature rise is 10 times faster than that of the last mass extinction about 56 million years ago.

Science has a firm handle on the various reasons why the climate changes naturally. Two examples are long-term fluctuations in sunlight due to changes in Earth’s orbit, which modulate ice ages, and shorter-term release of sun-dimming ash from large volcanoes, like Mount Pinatubo, which cooled Earth’s surface by 1 degree Fahrenheit in 1991.

None of these natural changes can explain the spike in heating since the 1800s. In contrast, physics calculates that most of the recent warming stems from heat-trapping greenhouse gases released by the burning of fossil fuels. According to climate scientist and data analyst Dr. Zeke Hausfather, “Our best estimate is that 100% of the warming the world has experienced is due to human activities. Natural factors — changes in solar output and volcanoes — would have led to slight cooling over the past 50 years.”

Here’s a brief video showing the different natural and human-caused factors that contribute to temperature changes.

Myth #5: It’s cold out. What happened to global warming?

It should be obvious that Earth as a whole can warm up and at the same time certain parts of the Earth can feel cold. Yet cold weather is commonly cited as evidence against climate change, both sincerely and, by some, disingenuously. Famously, in 2015, Senator Jim Inhofe of Oklahoma held up a snowball on the Senate floor on a cold winter day to deny the existence of climate change.

At issue here is the difference between weather and climate. When scientists use the term global warming, or climate change, they are referring to a broad temperature shift across the entire Earth’s surface over the course of years and decades. The term weather, on the other hand, refers to the short-term, sometimes abrupt day-to-day variation in any given location. A good way to think about it is: Weather is your mood; climate is your personality. Global warming does not prohibit cold; it just makes extreme cold less intense and less likely. Winter is still winter. It’s just not as wintry overall.

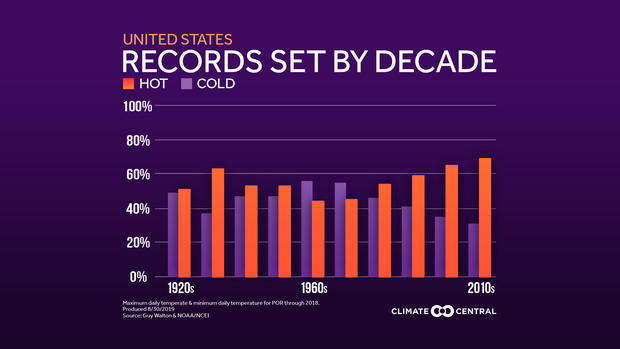

This is illustrated below. In recent decades, the ratio of record highs compared to record lows in the United States (and globally) is increasing, averaging approximately two record highs to every one record low. The duration of winter cold snaps is also decreasing, but of course cold air still exists.

Myth #6: In the 1970s scientists warned about a coming ice age. They were wrong. So why should we believe them now?

If you were of age in the 1970s you might remember a number of alarming newspaper headlines warning of an ice age on the way. But a deeper dive reveals those articles were based on a small number of papers very much in the scientific minority.

In the mid 20th century, climate science was very much in its infancy — scientists were just learning to decipher the influence of competing forces regulating climate. In the 1960s and 1970s, the science began to mature as researchers unearthed the most prominent factors such as the cooling influence of aerosols and the warming influence of greenhouse gases like carbon dioxide — concepts which have stood up to decades of rigorous testing.

Yet even at that early stage, a scientific consensus was emerging on warming, not cooling, in the near future. This was made clear by a 2008 study called “The Myth of the 1970s Global Cooling Scientific Consensus,” which conducted a survey of the peer-reviewed literature from 1965 to 1979. The research team found that of the 71 related research papers, 44 indicated warming while only 7 indicated cooling (20 did not make projections either way). “Global cooling was never more than a minor aspect of the scientific climate change literature of the era, let alone the scientific consensus,” the authors write.
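To put those counts in proportion, here is a small Python sketch that tallies the survey figures quoted above. The dictionary labels are illustrative; only the three counts come from the text.

```python
# Paper counts from the 2008 literature survey, as quoted in the text.
survey = {"warming": 44, "cooling": 7, "no projection": 20}

# The three categories should account for every paper surveyed.
total = sum(survey.values())

# Each category's share of the surveyed literature, in percent.
shares = {label: count / total * 100 for label, count in survey.items()}

# How many warming papers there were for every cooling paper.
warming_to_cooling = survey["warming"] / survey["cooling"]

for label, share in shares.items():
    print(f"{label}: {survey[label]} of {total} papers ({share:.0f}%)")
print(f"Warming papers per cooling paper: {warming_to_cooling:.1f}")
```

By this tally, cooling papers made up roughly a tenth of the surveyed literature, and warming papers outnumbered them by about six to one.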

So why then was there such an outsized influence in the social consciousness from these few cooling papers? For one thing, the paper suggests, ice ages make for very compelling and memorable headlines. But those stories often included contradictory evidence as well, and other news coverage at the time did focus on warming theories.

Selecting and highlighting past inaccuracies in science, even if they are the exception and not the rule, is an expedient way for politicians and opponents of climate action to sow doubt about the credibility of climate science.

In short, while a handful of scientists did predict cooling a half a century ago, that is a drop in the bucket compared to the tens of thousands of peer-reviewed scientific papers since then which substantiate that humans are heating the climate.

Myth #7: The temperature record is rigged or unreliable.

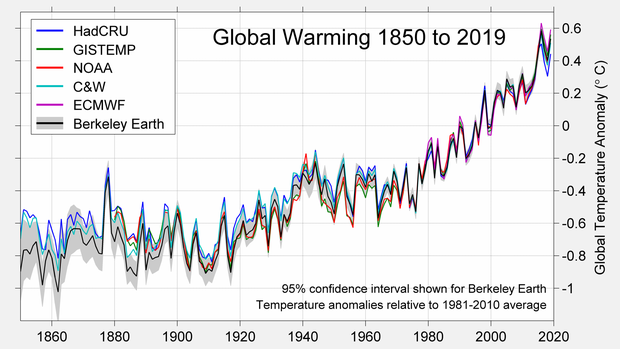

A common talking point among climate change skeptics is either “the temperature record is unreliable” or “the temperature record is rigged.” That might be a plausible argument if all of science relied on just one or two records; however, there are many independent temperature records produced by various independent bodies worldwide, and their data are remarkably consistent with each other.

These organizations include NASA, NOAA, the UK Met Office, the Japan Meteorological Agency and the European Centre for Medium-Range Weather Forecasts, just to name a few.

One of the variables they have to account for is a phenomenon called the Urban Heat Island effect. Simply put, large cities, which are expanding, heat up the local atmosphere due to the concentration of dark surfaces, buildings and industries releasing heat. The concern is that this extra heat may “contaminate” surface temperature trends. Scientists have studied this phenomenon thoroughly, and the surprising conclusion is that the warming trend in the temperature record of urban sites is, in general, similar to that of rural sites. So the urban heat island effect is real, but its influence on the overall record is not very substantial.

The temperature records are carefully fine-tuned by data experts to account for factors including the urban heat island effect, instrument sites being relocated, and instrument type changes. While each organization has its own unique methods for data gathering and analysis, the resulting temperature records are largely in sync.

Myth #8: Climate models are not accurate.

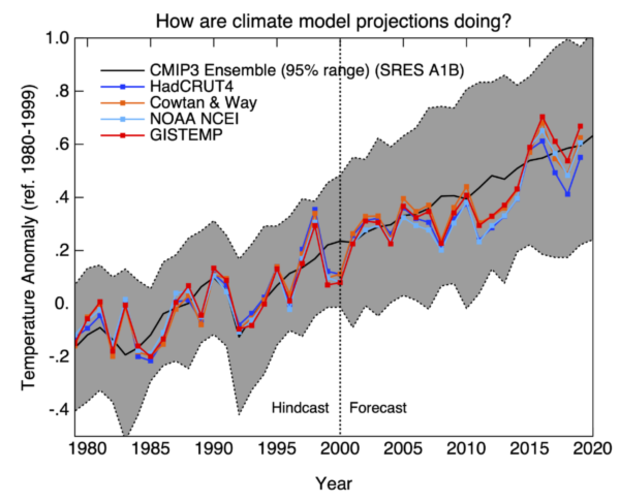

Considering how complex modeling the climate is, most model projections of future temperature, even the rather primitive climate computer models of the 1970s, 80s and 90s, were impressively accurate. This lends extra credibility to the much more advanced climate models of today in predicting future changes.

A recent study evaluated 17 climate model projections published between 1970 and 2007, with forecasts ending on or before 2017. The researchers found 14 of the 17 model projections were consistent with observed real-world surface temperatures, when they factored in the actual rise in greenhouse gas emissions. Here’s the assessment of the lead scientist on the study, Dr. Zeke Hausfather: “Climate models have by and large gotten things right.”

Dr. Gavin Schmidt, the head of NASA’s Goddard Institute for Space Studies, posted an even more recent assessment of CMIP3, the state-of-the-art collection of climate models run in the early 2000s. He concluded, “The CMIP3 simulations continue to be spot on (remarkably), with the trend in the multi-model ensemble mean effectively indistinguishable from the trends in the observations.”

In the graph below, the model projection is the black line and the colored lines are the actual temperature datasets from various agencies. As you can see, the magnitude and pace of temperature change consistently match.

To be sure, evaluating global temperature projections is not the only gauge of a model’s accuracy. Models can be expected to be accurate on general trends, such as whether global temperatures will warm, overall rainfall will increase or hurricanes will get stronger. However, when it comes to predicting regional changes and other specific types of events, the climate models are far from perfect. Future projections like whether rainfall will increase or decrease in San Francisco, or whether more or fewer hurricanes will hit Florida, are still uncertain and on the edge of climate models’ current ability.

Myth #9: Grand Solar Minimum is coming. It will counteract global warming.

Many scientists speculate that we are now entering the beginning of a Grand Solar Minimum — a period with decreased solar energy which could last a few decades. There is a general acknowledgement that this speculation may be true, but there is a lack of scientific consensus because of limited understanding of longer-term solar cycles.

If this happens it certainly would not be the first time. The most famous Grand Solar Minimum, called the Maunder Minimum, spanned from 1645 to 1715. The period indeed corresponds with a decrease in temperature, but was embedded in a much longer-term cooling period called the Little Ice Age (from about the 1300s through the mid 1800s). While it seems logical to assume the cooling during the Little Ice Age may have been due to a decrease in solar activity, leading theories actually point more so to volcanic activity.

With that said, a scientific collaboration to reconstruct past temperatures, called PAGES2K, indicates that global average temperatures decreased by no more than a couple of tenths of a degree Celsius during the Maunder Minimum. During that time the solar irradiance decreased by one-quarter of one percent.

Several studies have been conducted on the potential impact of a Grand Solar Minimum in the coming decades. The consensus of these studies finds that global average temperatures would decrease by no more than around half a degree Fahrenheit, but likely less. In contrast, human-caused climate change has already warmed the planet by 2 degrees Fahrenheit since the late 1800s, and climate scientists forecast we could see about 4 degrees Fahrenheit of additional warming by 2100.
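Because this section quotes some temperature changes in Celsius and others in Fahrenheit, it can help to put them on one scale. The Python sketch below converts the Fahrenheit figures quoted above into Celsius. It is simple unit arithmetic on the numbers in the text, not a climate projection, and the names are illustrative.

```python
def fahrenheit_delta_to_celsius(delta_f: float) -> float:
    """Convert a temperature *difference* from °F to °C.

    A difference scales by 5/9 only; the 32-degree offset used for
    absolute temperatures does not apply.
    """
    return delta_f * 5 / 9


# Temperature changes quoted in the text, in degrees Fahrenheit.
changes_f = {
    "Grand Solar Minimum cooling (upper bound)": 0.5,
    "warming since the late 1800s": 2.0,
    "possible additional warming by 2100": 4.0,
}

# The same changes expressed in degrees Celsius.
changes_c = {
    label: fahrenheit_delta_to_celsius(delta_f)
    for label, delta_f in changes_f.items()
}

for label, delta_c in changes_c.items():
    print(f"{label}: {changes_f[label]:.1f} °F = {delta_c:.2f} °C")
```

On a common scale, the largest cooling these studies attribute to a Grand Solar Minimum comes to under a third of a degree Celsius, while the warming already observed is a little over 1 degree Celsius.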

So, while a Grand Solar Minimum is possible, our best science tells us it would do nothing more than make a small dent in the overall warming trend. According to NASA, that amount of cooling would be balanced by just three years of greenhouse gas emissions. NASA also notes that the warming caused by greenhouse gas emissions from the human burning of fossil fuels is six times greater than the possible decades-long cooling from a prolonged Grand Solar Minimum. And any cooling would be short-lived, with temperatures bouncing right back after the minimum ends.

It’s also worth mentioning that during the Maunder Minimum certain regions, like Europe, cooled more than others. If this recurs during the next minimum, regional cooling may be slightly more noticeable in those regions, but it would still pale in comparison to the magnitude of warming from human-caused climate change.

Myth #10: Scientists claim climate change will destroy the planet by 2030.

Climate scientists are often accused of making alarming assertions about climate change, like “the impacts will be catastrophic by 2030” or “we only have a decade left to save the planet.” First and foremost, scientists are not predicting this. However, some politicians and media headlines have used select bits of scientific data to fuel the impression of impending Armageddon.

This specific myth comes directly from a quote in the 2018 Special Report produced by the United Nations Intergovernmental Panel on Climate Change (IPCC). Here’s what the quote actually says:

“The report finds that limiting global warming to 1.5°C would require ‘rapid and far-reaching’ transitions in land, energy, industry, buildings, transport, and cities. Global net human-caused emissions of carbon dioxide (CO2) would need to fall by about 45 percent from 2010 levels by 2030, reaching ‘net zero’ around 2050.”

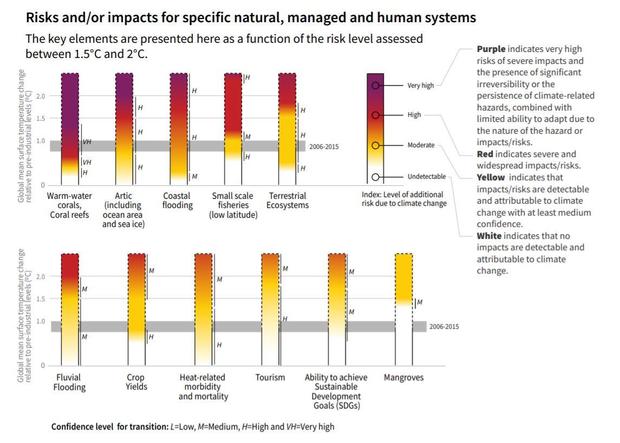

To simplify, the report concludes that if the global community wants to avoid breaching the threshold of 1.5 degrees Celsius of warming, then we need to abruptly cut emissions by rapidly reducing the use of fossil fuels by the end of the decade. At this point the globe has already warmed by slightly more than 1 degree Celsius; the vast majority of scientists agree there is little to no chance that warming will be held below 1.5 degrees.

Staying below 1.5 degrees Celsius of warming is a goal often cited as the limit needed to prevent the most severe consequences from climate change. But 1.5 degrees is not a magical cut-off point. There is no bright line separating “normal” from “catastrophic.” Rather, the impacts of global warming get progressively worse as temperatures incrementally rise. Some have already started.

One lead author of the IPCC report, Hans-Otto Pörtner, said, “Every extra bit of warming matters, especially since warming of 1.5°C or higher increases the risk associated with long-lasting or irreversible changes, such as the loss of some ecosystems.”

For people who live near sea level, like on low-lying Pacific islands, 1.5 degrees of warming will in fact be catastrophic, because it can mean the difference between an inhabitable and uninhabitable homeland due to sea-level rise. For others who are less vulnerable or have more resources, 1.5 degrees won’t have quite as drastic an impact.

The IPCC report lays out many other examples of the escalating damage produced by warming above 1.5 degrees. For instance, at that level of warming, it’s estimated that coral reefs will decline another 70% to 90%. If we hit 2 degrees Celsius of warming, the death toll for coral reefs jumps to 99%. This would not only be devastating to the aquatic species which rely directly on reefs, but also to millions of people worldwide who depend on the ecosystem for sustenance and business, as well as the web of life as a whole.

So, no, the world will not end in 10 years due to climate change. But the longer action is delayed, the more dire the consequences will be and the more likely it is that the changes will be irreversible.

The Skeptical Science website has compiled an exhaustive list of common myths and misconceptions about human-caused climate change, each complemented by peer-reviewed scientific research to illuminate the topics.