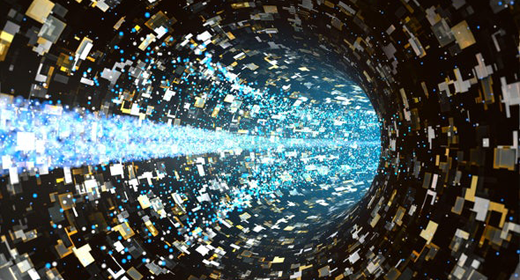

The setup of lasers and mirrors effectively “solved” a problem far too complicated for even the largest traditional computer system.

For the first time, a quantum computer made from photons—particles of light—has outperformed even the fastest classical supercomputers.

Physicists led by Chao-Yang Lu and Jian-Wei Pan of the University of Science and Technology of China (USTC) in Shanghai performed a technique called Gaussian boson sampling with their quantum computer, named Jiŭzhāng. The result, reported in the journal Science, was 76 detected photons—far above and beyond the previous record of five detected photons and the capabilities of classical supercomputers.

Unlike a traditional computer built from silicon processors, Jiŭzhāng is an elaborate tabletop setup of lasers, mirrors, prisms and photon detectors. It is not a universal computer that could one day send e-mails or store files, but it does demonstrate the potential of quantum computing.

Last year, Google captured headlines when its quantum computer Sycamore took roughly three minutes to do what would take a supercomputer three days (or 10,000 years, depending on your estimation method). In their paper, the USTC team estimates that it would take the Sunway TaihuLight, the third most powerful supercomputer in the world, a staggering 2.5 billion years to perform the same calculation as Jiŭzhāng.

This is only the second demonstration of quantum primacy, which is a term that describes the point at which a quantum computer exponentially outspeeds any classical one, effectively doing what would otherwise essentially be computationally impossible. It is not just proof of principle; there are also some hints that Gaussian boson sampling could have practical applications, such as solving specialized problems in quantum chemistry and math. More broadly, the ability to control photons as qubits is a prerequisite for any large-scale quantum internet. (A qubit is a quantum bit, analogous to the bits used to represent information in classical computing.)

“It was not obvious that this was going to happen,” says Scott Aaronson, a theoretical computer scientist now at the University of Texas at Austin who along with then-student Alex Arkhipov first outlined the basics of boson sampling in 2011. Boson sampling experiments were, for many years, stuck at around three to five detected photons, which is “a hell of a long way” from quantum primacy, according to Aaronson. “Scaling it up is hard,” he says. “Hats off to them.”

Over the past few years, quantum computing has risen from obscurity to a multibillion-dollar enterprise recognized for its potential impact on national security, the global economy and the foundations of physics and computer science. In 2019, the U.S. National Quantum Initiative Act was signed into law to invest more than $1.2 billion in quantum technology over the next 10 years. The field has also garnered a fair amount of hype, with unrealistic timelines and bombastic claims about quantum computers making classical computers entirely obsolete.

This latest demonstration of quantum computing’s potential from the USTC group is critical because it differs dramatically from Google’s approach. Sycamore uses superconducting loops of metal to form qubits; in Jiŭzhāng, the photons themselves are the qubits. Independent corroboration that quantum computing principles can lead to primacy even on totally different hardware “gives us confidence that in the long term, eventually, useful quantum simulators and a fault-tolerant quantum computer will become feasible,” Lu says.

A LIGHT SAMPLING

Why do quantum computers have enormous potential? Consider the famous double-slit experiment, in which a photon is fired at a barrier with two slits, A and B. The photon does not go through A, or through B. Instead, the double-slit experiment shows that the photon exists in a “superposition,” or combination of possibilities, of having gone through both A and B. In theory, exploiting quantum properties like superposition allows quantum computers to achieve exponential speedups over their classical counterparts when applied to certain specific problems.

Physicists in the early 2000s were interested in exploiting the quantum properties of photons to make a quantum computer, in part because photons can act as qubits at room temperatures, so there is no need for the costly task of cooling one’s system to a few kelvins (about –455 degrees Fahrenheit) as with other quantum computing schemes. But it quickly became apparent that building a universal photonic quantum computer was infeasible. To even build a working quantum computer would require millions of lasers and other optical devices. As a result, quantum primacy with photons seemed out of reach.

Then, in 2011, Aaronson and Arkhipov introduced the concept of boson sampling, showing how it could be done with a limited quantum computer made from just a few lasers, mirrors, prisms and photon detectors. Suddenly, there was a path for photonic quantum computers to show that they could be faster than classical computers.

The setup for boson sampling is analogous to the toy called a bean machine, which is just a peg-studded board covered with a sheet of clear glass. Balls are dropped into the rows of pegs from the top. On their way down, they bounce off of the pegs and each other until they land in slots at the bottom. Simulating the distribution of balls in slots is relatively easy on a classical computer.
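To see why the classical version is easy, here is a minimal sketch of a bean machine simulation. It assumes each peg sends a ball left or right with equal odds and ignores collisions between balls, which is a simplification of the toy described above. The function name `drop_balls` and its parameters are illustrative choices, not part of any experiment.

```python
import random
from collections import Counter


def drop_balls(n_balls, n_rows, seed=0):
    """Drop balls through n_rows of pegs and count how many land in each slot.

    Assumption: every peg deflects a ball left or right with probability 1/2,
    and balls do not interact with one another.
    """
    rng = random.Random(seed)
    slots = Counter()
    for _ in range(n_balls):
        # The final slot index equals the number of rightward bounces.
        slot = sum(rng.random() < 0.5 for _ in range(n_rows))
        slots[slot] += 1
    return [slots[k] for k in range(n_rows + 1)]


counts = drop_balls(10_000, 8)
for k, c in enumerate(counts):
    # Crude text histogram: one '#' per 100 balls.
    print(f"slot {k}: {'#' * (c // 100)}")
```

The work grows only in proportion to the number of balls times the number of rows, which is why a laptop handles it without trouble.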

Instead of balls, boson sampling uses photons, and it replaces pegs with mirrors and prisms. Photons from the lasers bounce off of mirrors and through prisms until they land in a “slot” to be detected. Unlike the classical balls, the photon’s quantum properties lead to an exponentially increasing number of possible distributions.

The problem boson sampling solves is essentially “What is the distribution of photons?” A boson sampling device answers that question simply by being the distribution of photons. Meanwhile, a classical computer has to figure out the distribution of photons by computing what’s called the “permanent” of a matrix. For an input of two photons, this is just a short calculation with a two-by-two array. But as the number of photonic inputs and detectors goes up, the size of the array grows, exponentially increasing the problem’s computational difficulty.
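As a rough illustration of the permanent, the sketch below computes it two ways: directly from the definition (a sum over all permutations) and with Ryser's formula, a standard method that still needs on the order of 2^n terms. The matrix entries here are small made-up integers; in a real experiment the matrices have complex entries, and nothing in this sketch is taken from the USTC analysis.

```python
from itertools import combinations, permutations
from math import prod


def permanent_naive(a):
    """Permanent from the definition: like a determinant, but with no minus signs."""
    n = len(a)
    return sum(
        prod(a[i][p[i]] for i in range(n))
        for p in permutations(range(n))  # n! terms
    )


def permanent_ryser(a):
    """Permanent via Ryser's inclusion-exclusion formula (about 2**n subsets)."""
    n = len(a)
    total = 0
    for size in range(1, n + 1):
        for cols in combinations(range(n), size):
            # Product over rows of the row sum restricted to the chosen columns.
            row_sum_product = prod(sum(row[j] for j in cols) for row in a)
            total += (-1) ** size * row_sum_product
    return (-1) ** n * total


two_by_two = [[1, 2], [3, 4]]
print(permanent_naive(two_by_two))  # 1*4 + 2*3 = 10
print(permanent_ryser(two_by_two))  # 10
```

For the two-by-two case the whole calculation is two multiplications and one addition. Each additional row and column multiplies the number of terms, which is the exponential growth the article refers to.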

Last year the USTC group demonstrated boson sampling with 14 detected photons—hard for a laptop to compute, but easy for a supercomputer. To scale up to quantum primacy, they used a slightly different protocol, Gaussian boson sampling.

According to Christine Silberhorn, a quantum optics expert at the University of Paderborn in Germany and one of the co-developers of Gaussian boson sampling, the technique was designed to avoid the unreliable single photons used in Aaronson and Arkhipov’s “vanilla” boson sampling.

“I really wanted to make it practical,” she says. “It’s a scheme which is specific to what you can do experimentally.”

Even so, she acknowledges that the USTC setup is dauntingly complicated. Jiŭzhāng begins with a laser that is split so it strikes 25 crystals made of potassium titanyl phosphate. After each crystal is hit, it reliably spits out two photons in opposite directions. The photons are then sent through 100 inputs, where they race through a track made of 300 prisms and 75 mirrors. Finally, the photons land in 100 slots where they are detected. Averaging over 200 seconds of runs, the USTC group detected about 43 photons per run. But in one run, they observed 76 photons—more than enough to justify their quantum primacy claim.

It is difficult to estimate just how much time would be needed for a supercomputer to solve a distribution with 76 detected photons—in large part because it is not exactly feasible to spend 2.5 billion years running a supercomputer to directly check it. Instead, the researchers extrapolate from the time it takes to classically calculate for smaller numbers of detected photons. At best, solving for 50 photons, the researchers claim, would take a supercomputer two days, which is far slower than the 200-second run time of Jiŭzhāng.
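The size of the claimed gap is easier to grasp as a ratio. The short calculation below uses only the figures quoted above (2.5 billion years, two days and 200 seconds); the 365.25-day year is an assumption made for the conversion.

```python
SECONDS_PER_DAY = 24 * 3600
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY  # assumption: Julian year

quantum_seconds = 200                          # Jiŭzhāng run time quoted above
classical_76_seconds = 2.5e9 * SECONDS_PER_YEAR  # estimate for 76 detected photons
classical_50_seconds = 2 * SECONDS_PER_DAY       # estimate for 50 detected photons

speedup_76 = classical_76_seconds / quantum_seconds
speedup_50 = classical_50_seconds / quantum_seconds

print(f"76 photons: about {speedup_76:.1e} times faster")
print(f"50 photons: about {speedup_50:.0f} times faster")
```

On these numbers the advantage is a factor of several hundred at 50 photons and roughly 4 × 10^14 at 76 photons, which shows how steeply the classical cost climbs with photon count.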

Boson sampling schemes have languished at low numbers of photons for years because they are incredibly difficult to scale up. To preserve the sensitive quantum arrangement, the photons must remain indistinguishable. Imagine a horse race where the horses all have to be released from the starting gate at exactly the same time and finish at the same time as well. Photons, unfortunately, are a lot more unreliable than horses.

As photons in Jiŭzhāng travel a 22-meter path, their positions can differ by no more than 25 nanometers. That is the equivalent of 100 horses going 100 kilometers and crossing the finish line with no more than a hair’s width between them, Lu says.
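Lu's analogy can be checked with a few lines of arithmetic: scale the 25-nanometer tolerance over 22 meters up to a 100-kilometer race. The range used for the width of a human hair is an assumption (hair thickness varies considerably from person to person), not a figure from the article.

```python
PATH_LENGTH_M = 22.0        # photon path length quoted above
TOLERANCE_M = 25e-9         # allowed position difference quoted above
RACE_LENGTH_M = 100_000.0   # 100 kilometers, from the analogy

# Assumption: a human hair is very roughly 20 to 180 micrometers wide.
HAIR_WIDTH_RANGE_M = (20e-6, 180e-6)

relative_tolerance = TOLERANCE_M / PATH_LENGTH_M
horse_gap_m = relative_tolerance * RACE_LENGTH_M

print(f"relative tolerance: {relative_tolerance:.2e}")
print(f"equivalent gap after 100 km: {horse_gap_m * 1e3:.2f} mm")
```

The equivalent gap comes out at roughly a tenth of a millimeter, which falls inside the assumed hair-width range, so the comparison holds up.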

QUANTUM QUESTING

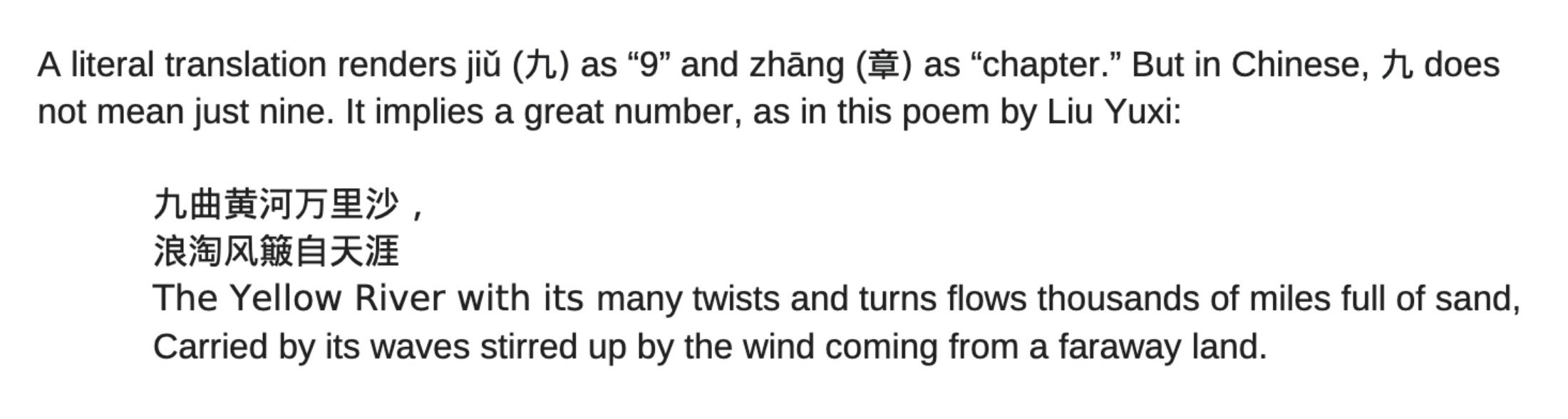

The USTC quantum computer takes its name, Jiŭzhāng, from Jiŭzhāng Suànshù, or “The Nine Chapters on the Mathematical Art,” an ancient Chinese text with an impact comparable to Euclid’s Elements.

Quantum computing, too, has many twists and turns ahead. Outspeeding classical computers is not a one-and-done deal, according to Lu, but will instead be a continuing competition to see if classical algorithms and computers can catch up, or if quantum computers will maintain the primacy they have seized.

Things are unlikely to be static. At the end of October, researchers at the Canadian quantum computing start-up Xanadu found an algorithm that quadratically cut the classical simulation time for some boson sampling experiments. In other words, if 50 detected photons sufficed for quantum primacy before, you would now need 100.
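The step from 50 to 100 photons follows if one assumes the classical cost grows exponentially with photon number and that a quadratic speedup means the new cost is the square root of the old one. The sketch below works through that toy model; the base of 2 and the function names are illustrative assumptions, not details of the Xanadu algorithm.

```python
import math


def cost_before(n_photons, base=2.0):
    """Toy model: classical cost grows as base ** n_photons."""
    return base ** n_photons


def cost_after(n_photons, base=2.0):
    """Quadratic speedup in this toy model: new cost is the square root of the old."""
    return math.sqrt(cost_before(n_photons, base))


def photons_for_same_cost(n_before, base=2.0):
    """Smallest photon count whose sped-up cost matches the old cost at n_before."""
    target = cost_before(n_before, base)
    n = n_before
    while cost_after(n, base) < target:
        n += 1
    return n


print(photons_for_same_cost(50))
```

Taking the square root of an exponential halves its exponent, so the photon count has to double to keep the classical task equally hard.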

For theoretical computer scientists like Aaronson, the result is exciting because it helps give further evidence against the extended Church-Turing thesis, which holds that any physical system can be efficiently simulated on a classical computer.

“At the very broadest level, if we thought of the universe as a computer, then what kind of computer is it?” Aaronson says. “Is it a classical computer? Or is it a quantum computer?”

So far, the universe, like the computers we are attempting to make, seems to be stubbornly quantum.

ABOUT THE AUTHOR

Daniel Garisto

Daniel Garisto is a freelance science journalist covering advances in physics and other natural sciences. His writing has appeared in Nature News, Science News, Undark, and elsewhere.